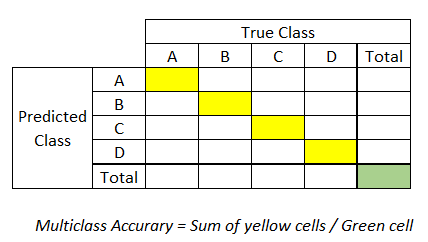

The table presents the balanced accuracy, recall, F1 score, and kappa... | Download Scientific Diagram

Detect fraudulent transactions using machine learning with Amazon SageMaker | AWS Machine Learning Blog

Balanced accuracy score, recall score, and AUC score with different... | Download Scientific Diagram

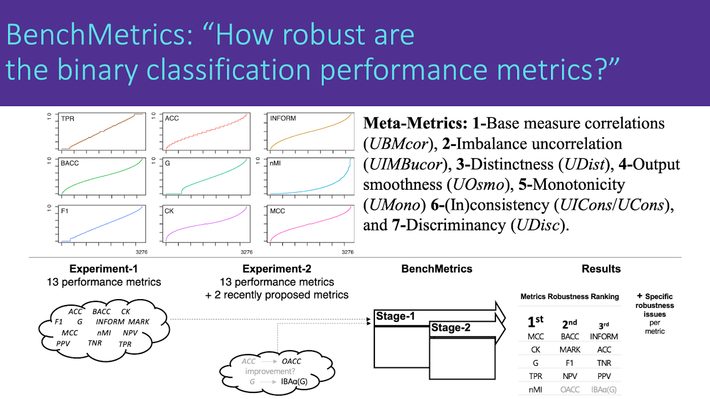

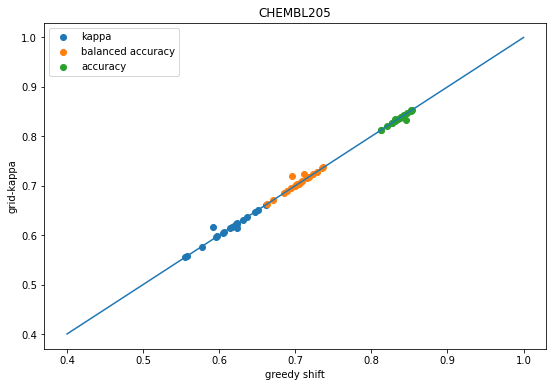

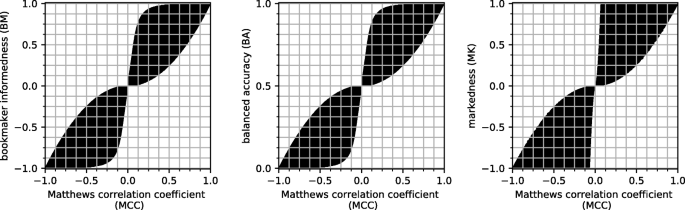

The Matthews correlation coefficient (MCC) is more reliable than balanced accuracy, bookmaker informedness, and markedness in two-class confusion matrix evaluation | BioData Mining | Full Text

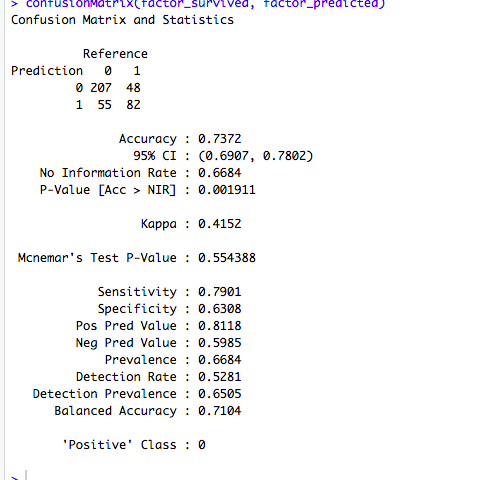

Cohen's Kappa: What it is, when to use it, and how to avoid its pitfalls | by Rosaria Silipo | Towards Data Science

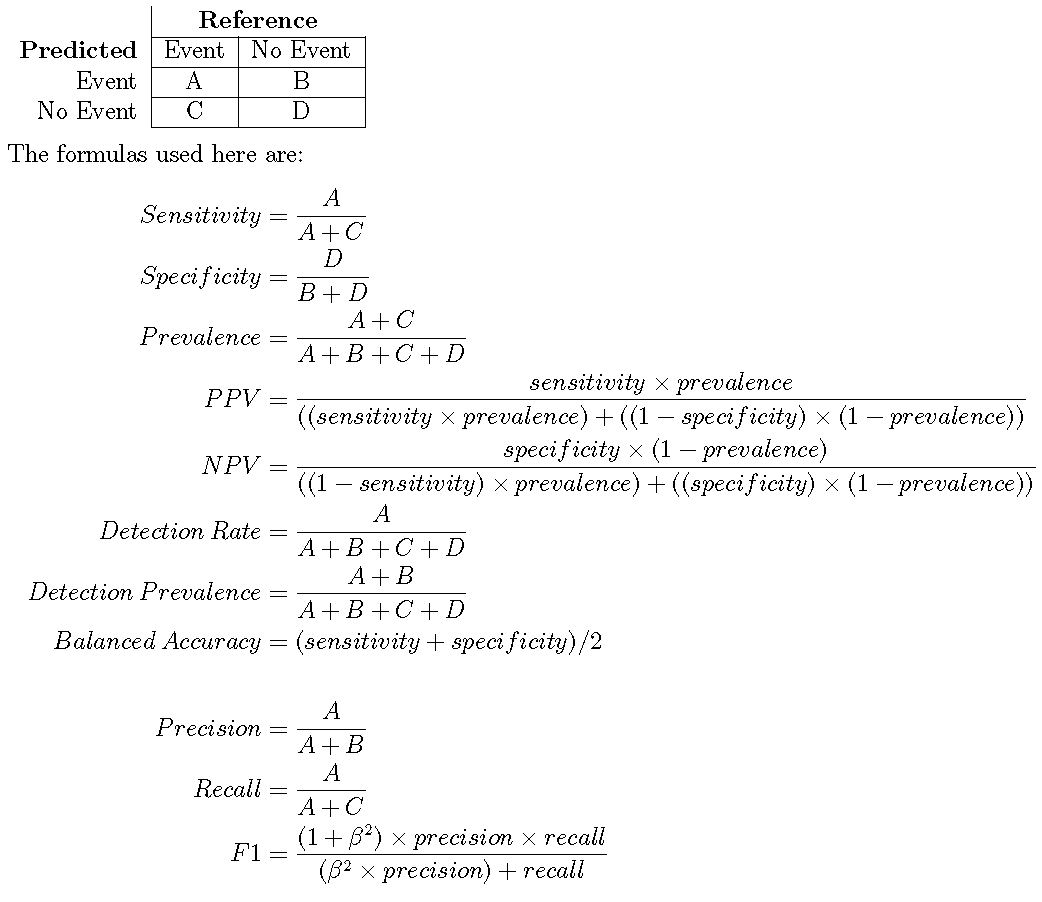

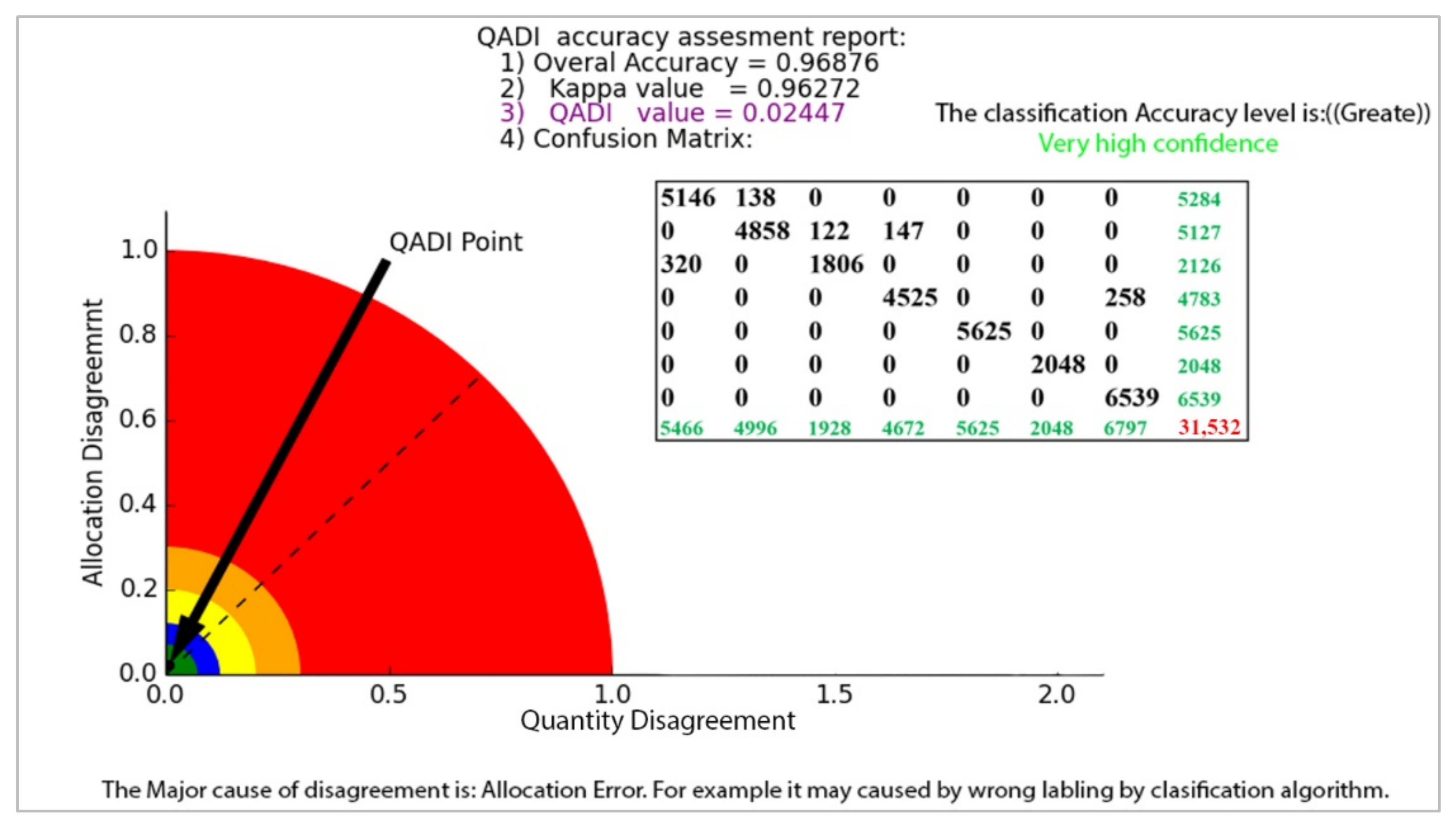

Sensors | Free Full-Text | QADI as a New Method and Alternative to Kappa for Accuracy Assessment of Remote Sensing-Based Image Classification

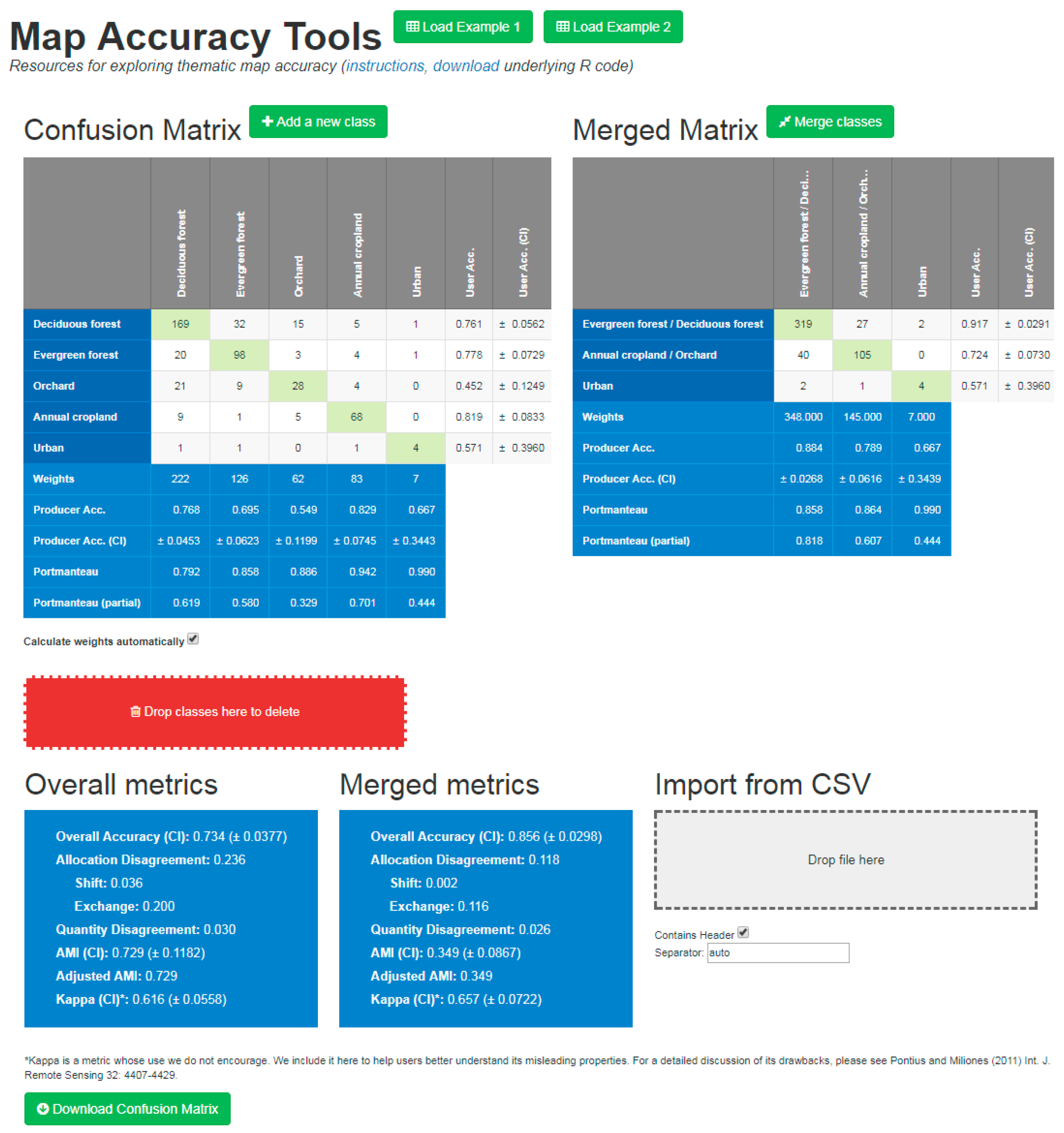

Remote Sensing | Free Full-Text | An Exploration of Some Pitfalls of Thematic Map Assessment Using the New Map Tools Resource

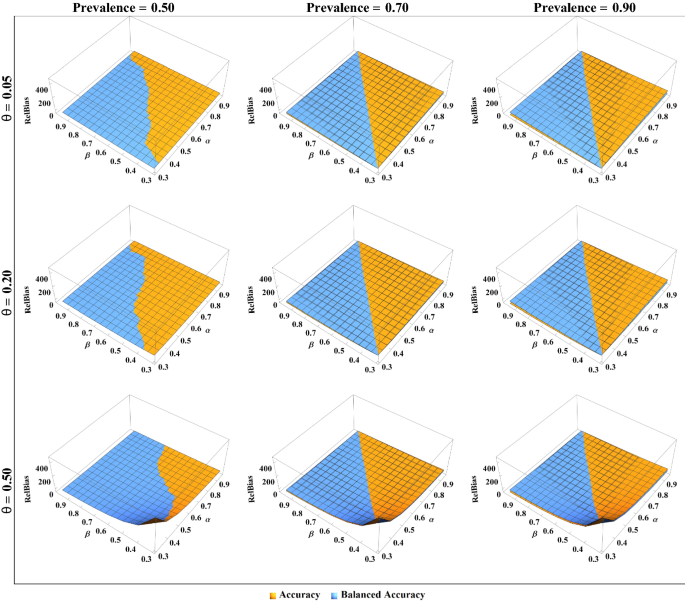

Fair evaluation of classifier predictive performance based on binary confusion matrix | Computational Statistics

![Predictive Accuracy: A Misleading Performance Measure for Highly Imbalanced Data | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/8eff162ba887b6ed3091d5b6aa1a89e23342cb5c/10-Table7-1.png)

[PDF] Predictive Accuracy: A Misleading Performance Measure for Highly Imbalanced Data | Semantic Scholar

What does the Kappa statistic measure? - techniques - Data Science, Analytics and Big Data discussions

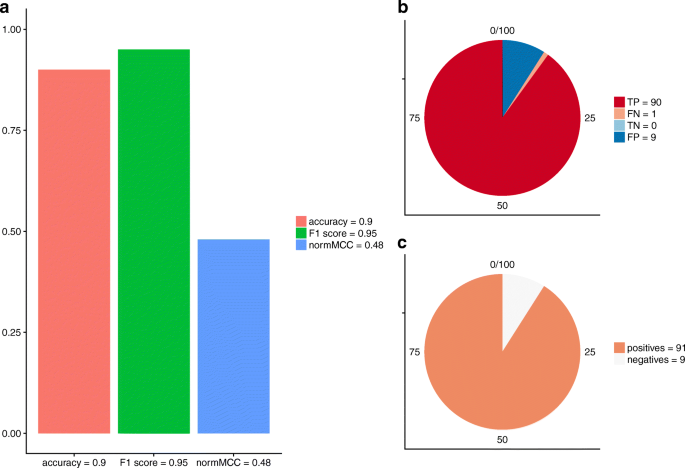

The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation | BMC Genomics | Full Text