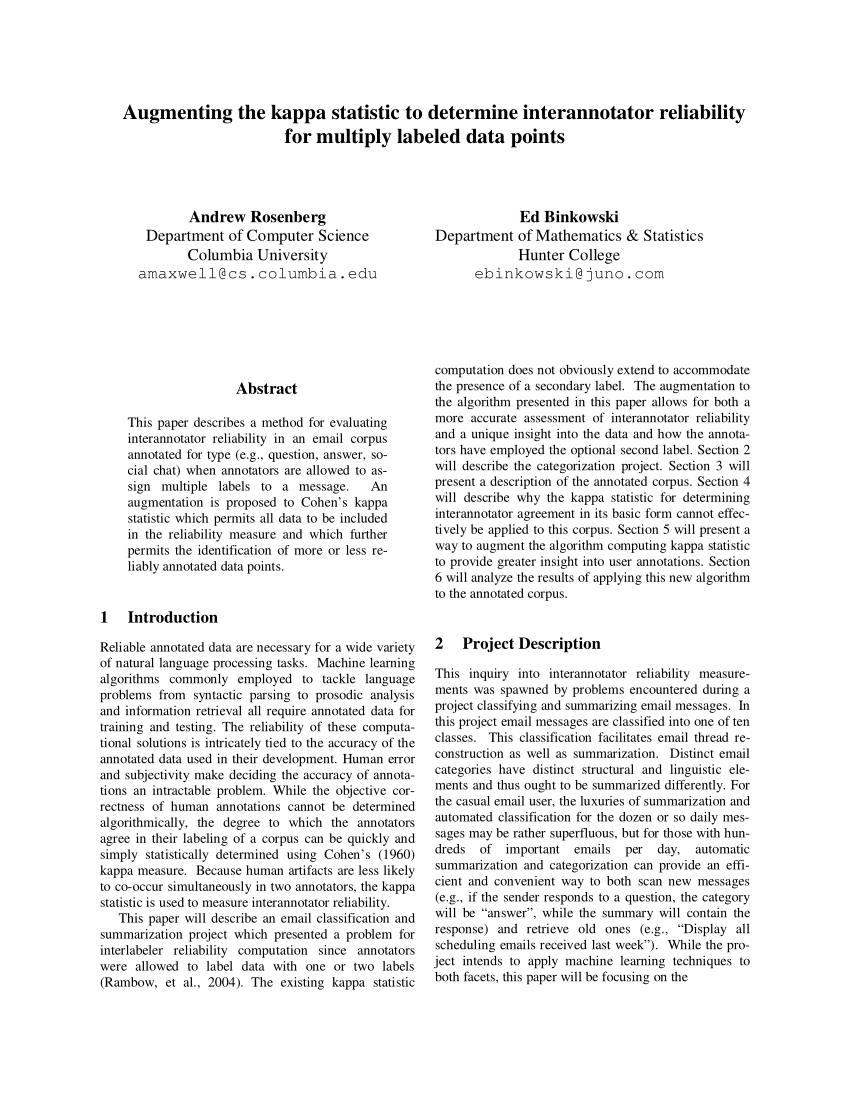

(PDF) Augmenting the kappa statistic to determine interannotator reliability for multiply labeled data points

Arabic Sentiment Analysis of YouTube Comments: NLP-Based Machine Learning Approaches for Content Evaluation

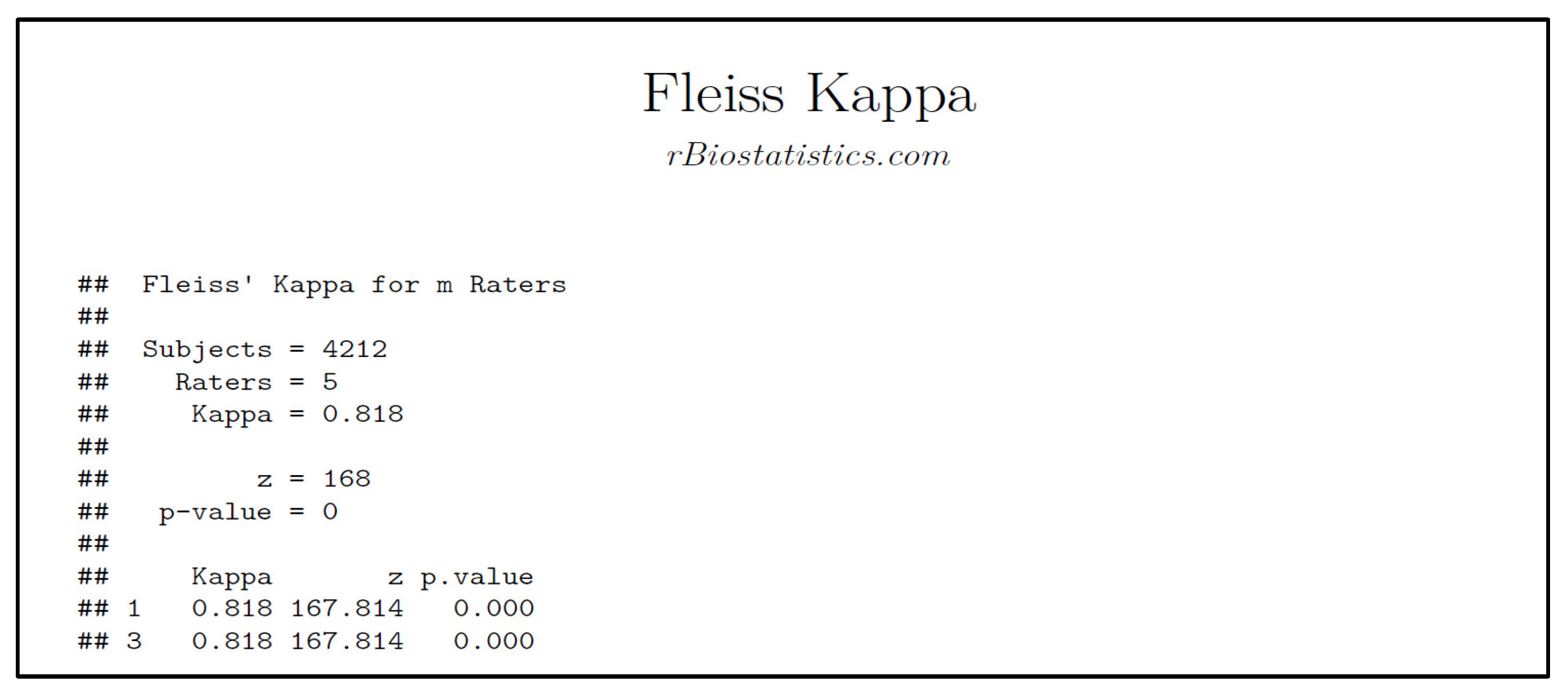

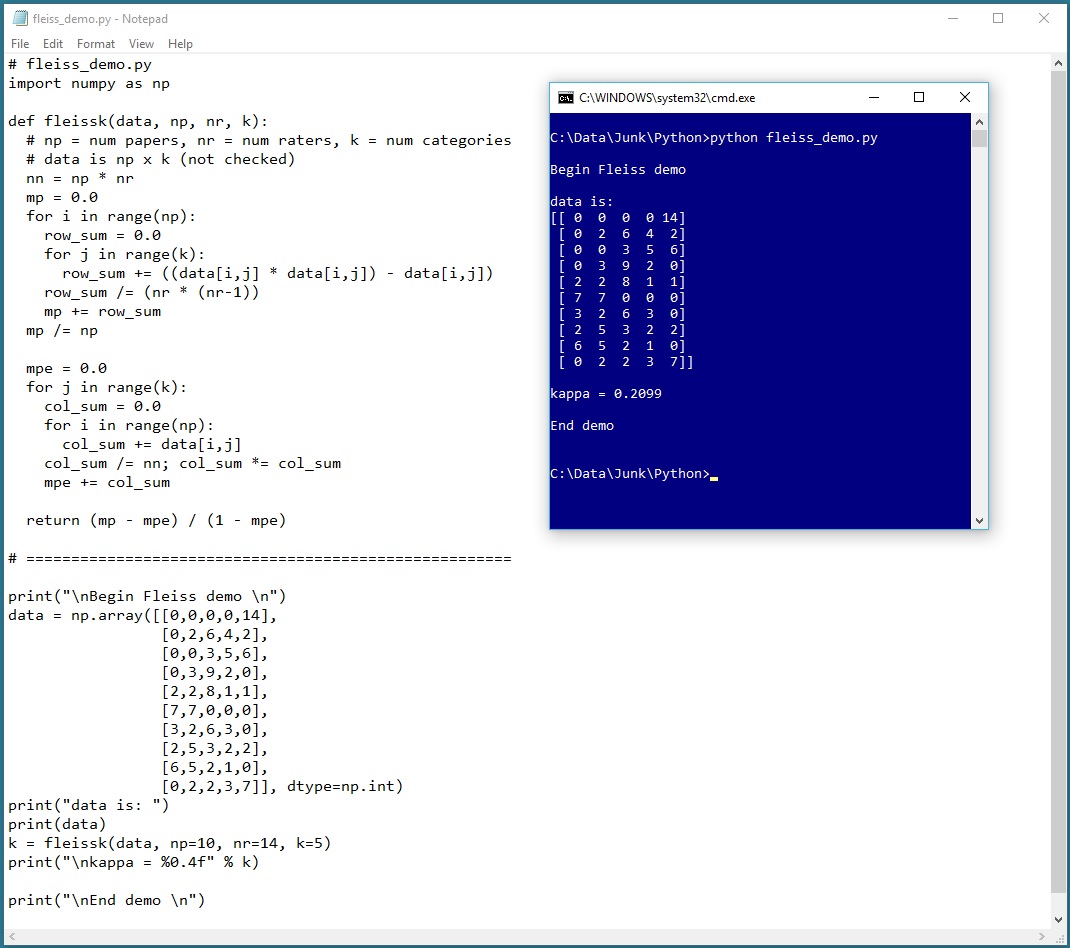

Adding Fleiss's kappa in the classification metrics? · Issue #7538 · scikit-learn/scikit-learn · GitHub

Identifying factors that shape whether digital food marketing appeals to children | Public Health Nutrition | Cambridge Core

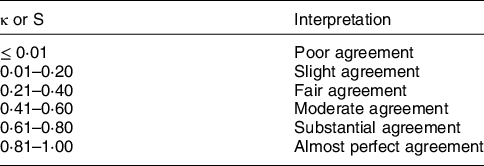

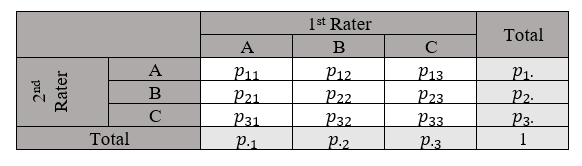

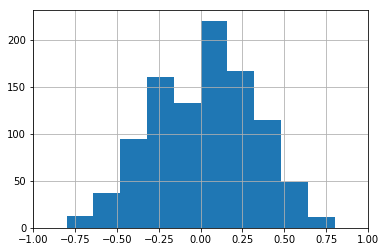

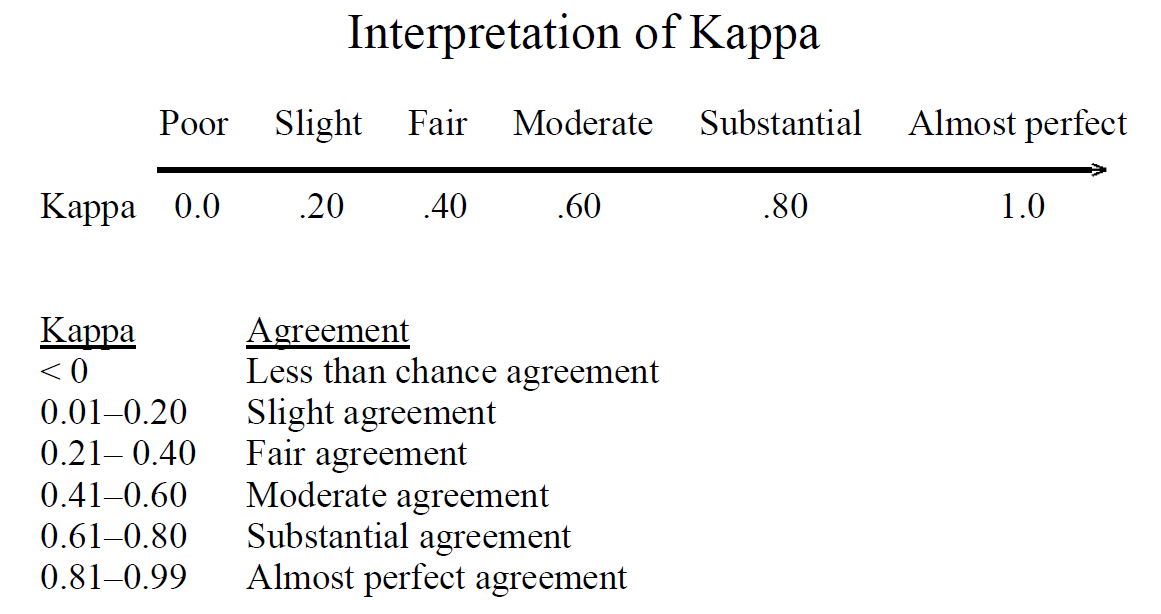

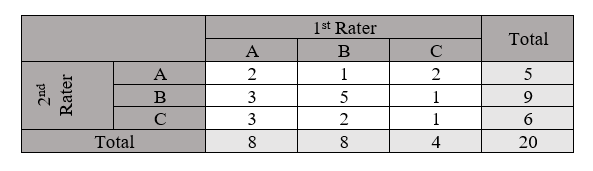

Inter-rater agreement Kappas. a.k.a. inter-rater reliability or… | by Amir Ziai | Towards Data Science

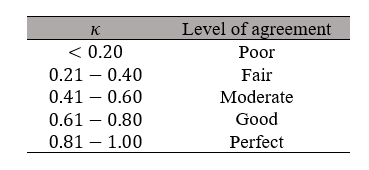

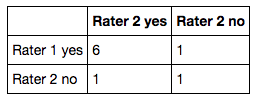

Cohen's Kappa and Fleiss' Kappa— How to Measure the Agreement Between Raters | by Audhi Aprilliant | Medium

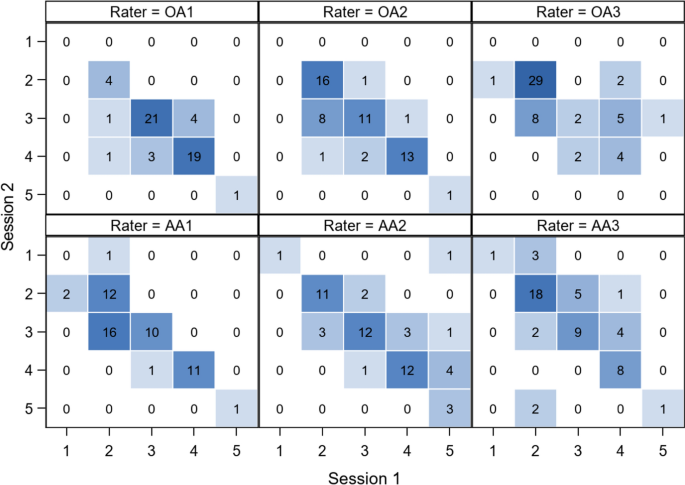

Cancers | Free Full-Text | Deep Learning Models for Automated Assessment of Breast Density Using Multiple Mammographic Image Types

BDCC | Free Full-Text | Arabic Sentiment Analysis of YouTube Comments: NLP-Based Machine Learning Approaches for Content Evaluation

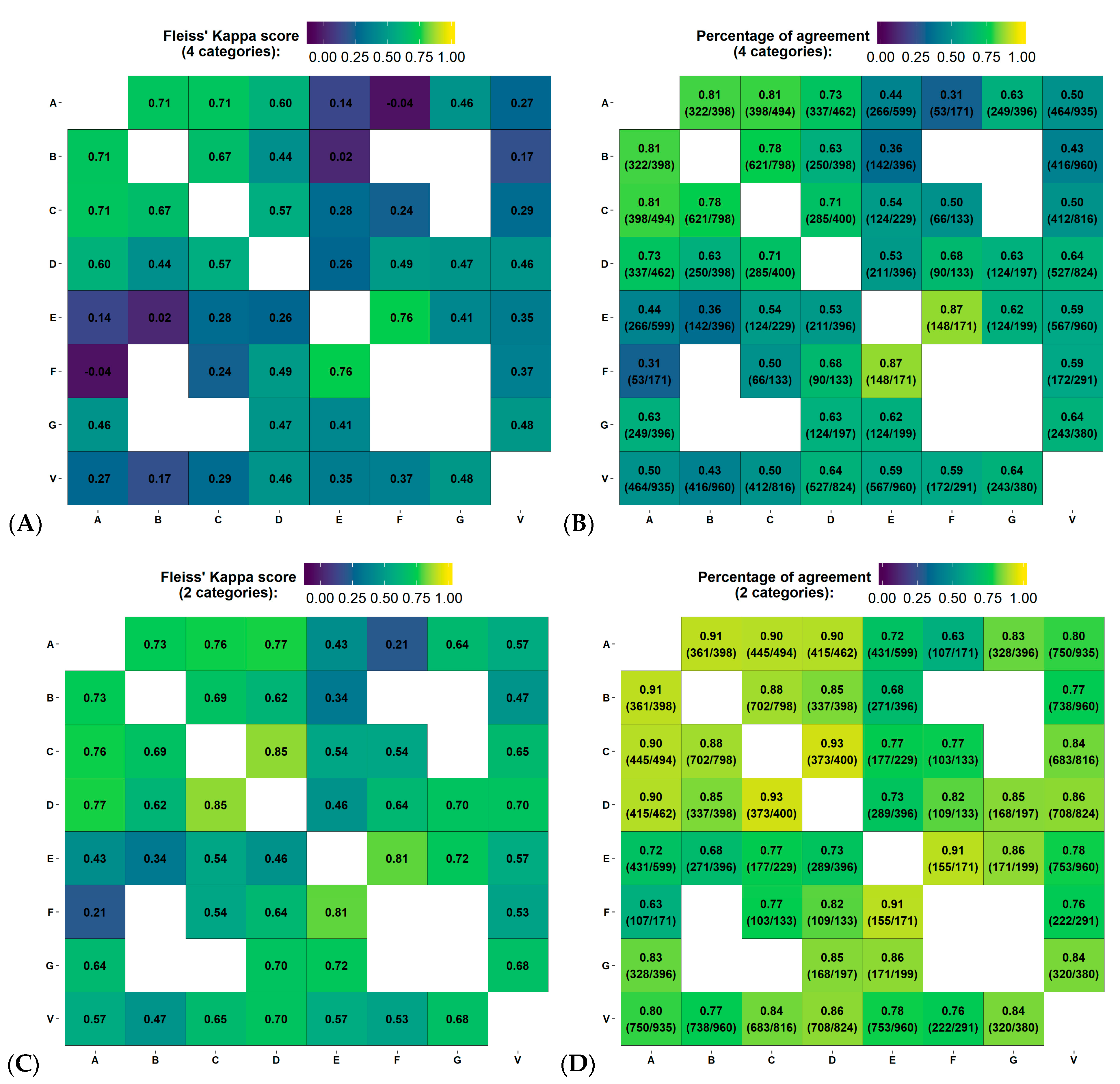

Inter-observer proportion of agreement (PoA), Fleiss' kappa coefficient... | Download Scientific Diagram

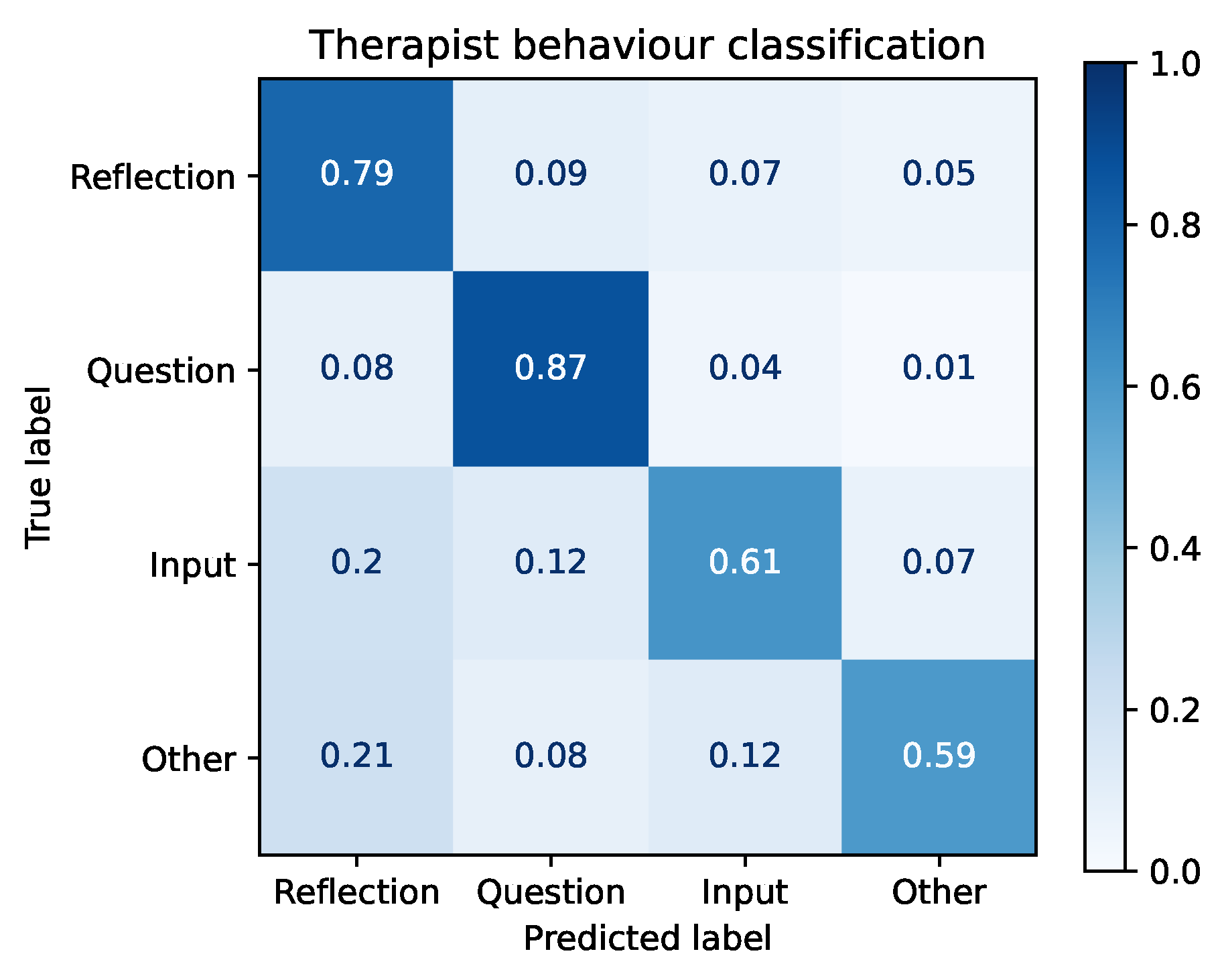

Future Internet | Free Full-Text | Creation, Analysis and Evaluation of AnnoMI, a Dataset of Expert-Annotated Counselling Dialogues

![RuSentiTweet: a sentiment analysis dataset of general domain tweets in Russian [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2022/cs-1039/1/fig-4-full.png)